Copilot, OpenAI, and the Idea-Propagation Value Chain

A paradigm shift is approaching

I was never great at programming.

It was something I was always told I should know. I remember picking it up in high school and thinking it was cool, but I had no strong desire to return to it. In college, I studied electrical engineering, and to my chagrin (and my classmates’), the basics of computer science were required.

Java, C, ARM assembly. My interest was growing, even though I was only scraping by thanks to my computer science roommate and a study group that wouldn’t let anyone miss an assignment.

Part of my frustration was the barrier to entry. Setting up Java and the Eclipse IDE on my Windows machine required me to either know what I was doing (nope) or cobble together online tutorials until something actually fit my setup.

The other problem I had was translating my mental model of a problem into code. I could construct a solution in my head, but getting the program to actually work was another challenge.

Internships, classes, and many online tutorials later, I decided to get my Master’s in Computer Science.

My code quality didn’t stand out compared to my classmates’, but it didn’t matter. I could explain different technology stacks, comprehend software without knowing the language, and knew which tool was most appropriate for a job. I used Google Cloud to deploy web apps, I used CUDA to apply different filters to images, and I built a model in R to predict the NBA MVP.

Jack of all trades, master of none. I would have a fun idea for a project, pick a framework for solving it, and dig into that tool.

Idea-Propagation Value Chain

This post was inspired by Ben Thompson’s Stratechery piece on what OpenAI’s DALL-E, Stable Diffusion, and Midjourney have done for art, an innovation whose significance cannot be overstated.

The progress of human history has been a matter of identifying bottlenecks at each step of the idea-propagation value chain and effectively eliminating them.

Here is a 10,000-foot view of the five steps in the chain:

Creation - Coming up with a new idea

Substantiation - Making it happen

Duplication - Copying it

Distribution - Propagating it to people

Consumption - Actually using it

The printing press eliminated the bottleneck at duplication, though access to it remained limited for hundreds of years. The internet eliminated the bottleneck at distribution for most of the developed world.

The thesis of the Stratechery article is that OpenAI and its competitors represent the decoupling of an artistic idea’s creation from its substantiation. You no longer need to be a world-class painter, photographer, or graphic designer to manifest whatever idea you have.

This is contentious, but I have been shocked by some of the improvements to AI-generated art in just the past year!

So how does this apply to software?

Let’s re-contextualize these steps to better apply them to software.

The creation of an idea remains relatively the same. I have an idea for an app - great! I substantiate it by coding in Swift and running backend servers on AWS. My code can be duplicated thanks to Git or a centralized service like GitHub. It gets distributed through the iOS App Store for consumption (use) by everyone who downloads it.

I have my own opinions about Apple’s App Store policies, but I don’t want to go completely off the rails.

Enter Copilot.

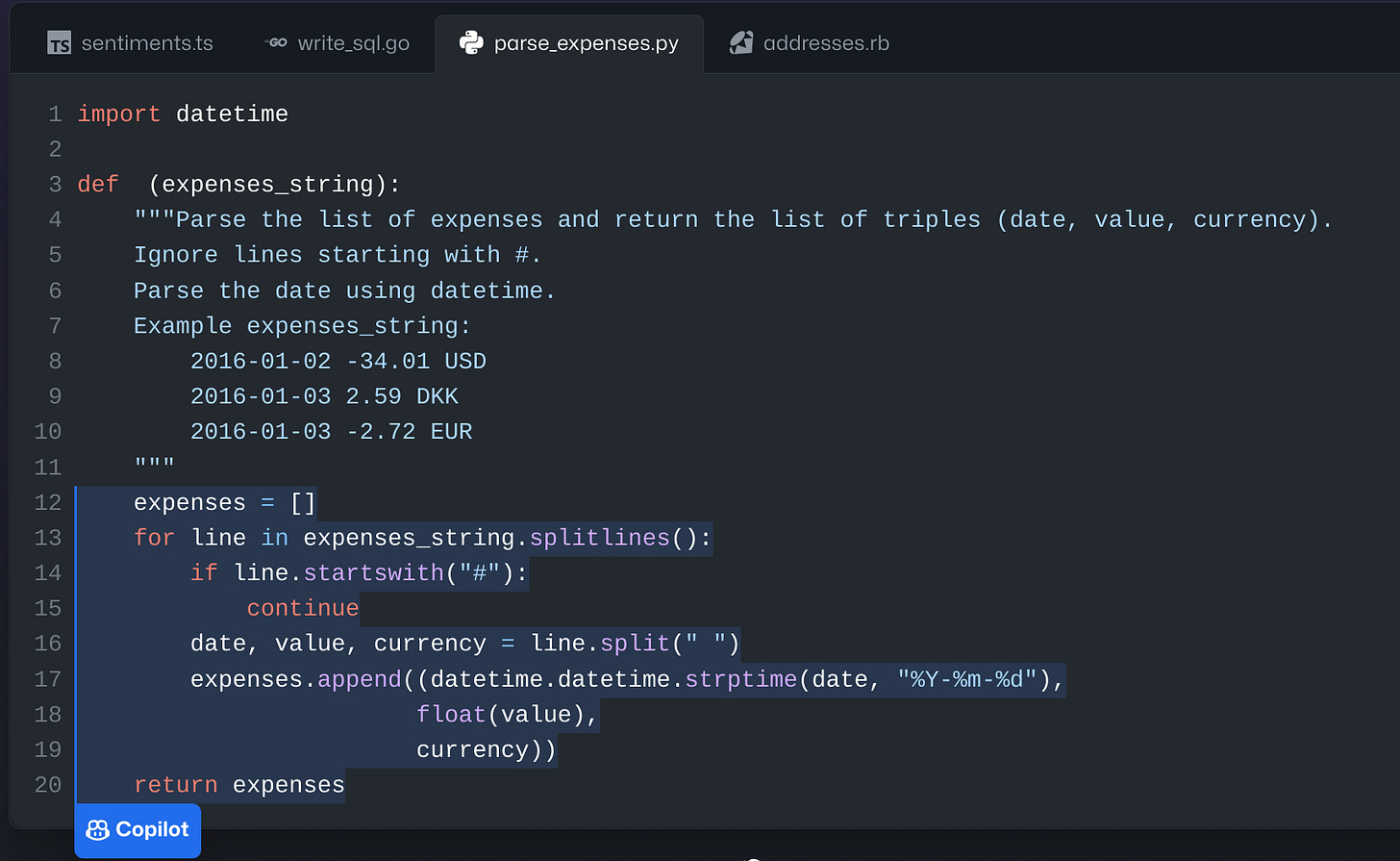

Copilot is an AI tool that suggests code snippets from a prompt, for a selection of programming languages. Its model was trained on code aggregated from GitHub’s many repositories.

The screenshot above shows Copilot in action: it takes in a long prompt and generates the corresponding code.

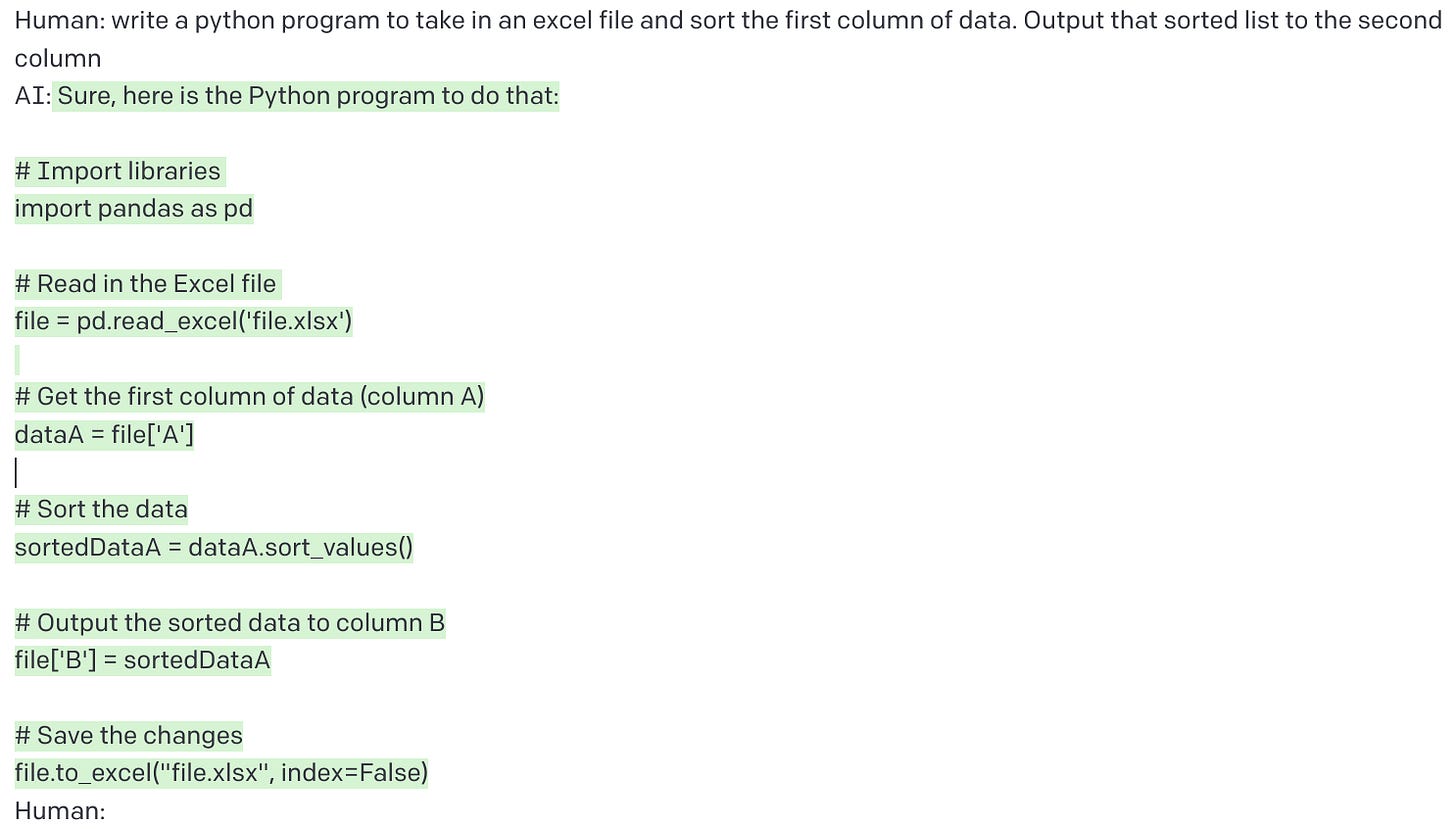

OpenAI is offering a similar tool in its chatbot. It has a “Natural Language to Python” preset, along with the ability to generate code in multiple programming languages.

Here, I asked it for some Python code to sort data in Excel for me.

I think this is pretty cool! But what could it mean?

What are the ramifications?

Long term, I am entirely optimistic about these types of tools. My Bayesian priors about how much change could come in the near future are being severely challenged. Let’s go back to image generation, which has been broadly available for a few months now, to see how it compares.

First, image generation is a subjective medium that can have different definitions of “good.” Is this image lifelike? Is the image pleasurable to the eye? Are the hands in the image a garbled mess of fingers?

The number of subjective decisions made in software would probably surprise most people, but the code still needs to work! It could be unmaintainable, suboptimal, or even nonfunctional!

These are problems that need to be worked out. Large generative models are improving faster than most people expected. OpenAI has essentially opened Pandora’s box, and its chatbot is the next big tool for 13-year-olds with no desire to do their own homework.

Will these tools entrench or disrupt programming languages?

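An under-the-radar, deeply nerdy long-term concern is that flawed languages get perpetuated far longer than they should be. These models learn from existing code, so they are likely to be best at the languages that already have the most code written in them.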

The NSA has started strongly recommending a move away from memory-unsafe languages such as C and C++.

Google’s Carbon initiative is a recent project aimed at creating a replacement for C++. I am lukewarm about it, considering that my knee-jerk reaction to any Google announcement is to wonder when the project will be killed. However, Google has done this before: it created Go to fit the needs of its scaling business. ¯\_(ツ)_/¯

But if OpenAI’s models write high-quality C++, then of course Carbon is going to lose out! The Rust fanboys would be heartbroken if their favorite language’s ascent were blocked by the historical prominence of C/C++ code.

I could go either way on this, though I lean toward thinking these tools might encourage the adoption of new languages. Heck, if it’s the same amount of work, what does the choice of language matter, as long as the competing languages can be benchmarked? I asked OpenAI to write different sorting algorithms in C and Rust. The results were pretty comparable.
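To make that comparison a little more concrete, here is a minimal sketch of one such algorithm, a top-down merge sort, written in Rust. It is an illustrative sketch rather than the code OpenAI produced (which isn’t reproduced here); the function name `merge_sort` is a hypothetical choice, and the sketch assumes the elements implement `Ord + Clone`.

```rust
/// Sorts a slice in place using a top-down merge sort.
/// Illustrative sketch: assumes elements are `Ord + Clone`.
/// The sort is stable: equal elements keep their original relative order.
pub fn merge_sort<T: Ord + Clone>(items: &mut [T]) {
    let len = items.len();
    // A slice of zero or one element is already sorted.
    if len <= 1 {
        return;
    }
    let mid = len / 2;

    // Recursively sort each half in place.
    merge_sort(&mut items[..mid]);
    merge_sort(&mut items[mid..]);

    // Merge the two sorted halves into a temporary buffer.
    let mut merged = Vec::with_capacity(len);
    let (mut i, mut j) = (0, mid);
    while i < mid && j < len {
        // `<=` prefers the left half on ties, which keeps the sort stable.
        if items[i] <= items[j] {
            merged.push(items[i].clone());
            i += 1;
        } else {
            merged.push(items[j].clone());
            j += 1;
        }
    }
    // At most one of these still has elements left; append the remainder.
    merged.extend_from_slice(&items[i..mid]);
    merged.extend_from_slice(&items[j..]);

    // Copy the merged result back over the original slice.
    items.clone_from_slice(&merged);
}
```

Calling `merge_sort(&mut v)` on a `Vec<i32>` sorts it in place. Rust’s standard library already provides a stable `slice::sort`, so a hand-rolled version like this is mainly useful as a benchmark subject: write the equivalent in C, time both on the same input, and compare.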

Will there be competition?

Competition among these models should encourage better products, services, and technologies, leading to more innovation, higher-quality models, and better ways of presenting them to the user. Right now I pay $10 a month for Copilot, plus a small usage-based fee for OpenAI.

One peripheral category of competitors is no-code frameworks. “No code” refers to tools that let people build software visually, for example by designing a user interface and having the corresponding code generated automatically.

While I haven’t rigorously examined every no-code framework available, the ones I have seen appear to construct only a front end. For example, Bravo helps convert Figma designs into code, and Qt offers similar tools.

This isn’t direct competition for Copilot, since it only handles the visual design of applications, but it is a start. And I expect this category will only grow; Figma CEO Dylan Field discussed it with The Verge’s editor-in-chief Nilay Patel on Patel’s podcast, Decoder.

One of my biggest fears is that only a handful of viable large language models (LLMs) emerge, and a duopoly forms as the only real option for AI-assisted development because no one else has the data to create anything useful.

Right now, there is fierce, fierce competition among frameworks in every mobile, web, and embedded development environment. I hope that doesn’t change with the shift to AI-assisted development.

Is it all hype?

I would strongly encourage anyone who thinks this is all a bunch of BS to sign up for the OpenAI Beta to test it out. You can still fool and mislead it, but it can also be useful if it is used correctly!

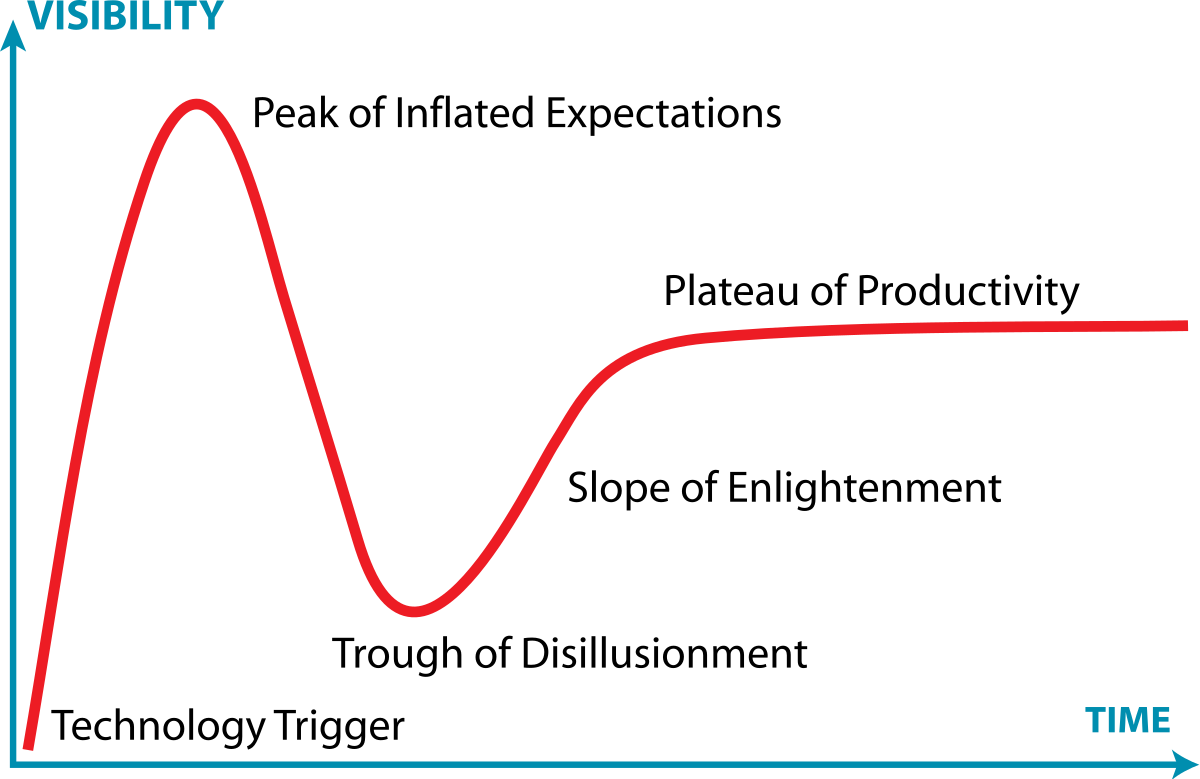

Then there’s the famous Gartner Hype Cycle for new technology.

We are currently on the very quick, very real rise we see at the start of a new technology, climbing toward what Gartner calls the Peak of Inflated Expectations. Will public sentiment overestimate the impact? Probably.

Do we know where that plateau of productivity will form? No!

So what’s the future?

I don’t know. Occam’s razor might tell us that these tools will be a novelty in the short term, increase productivity for developers in the medium term, and diminish the need for large development teams in the long term.

The problem is that the short term could be one week, the medium term six months, and the long term a year. I truly don’t know, because these models have been improving so quickly.

The range of emotions and thoughts about the kind of future I’ve described is vast, and almost always reasonable. It’s normal to be skeptical, optimistic, or even aloof, because hey, why would this affect me?

What is not okay is to be a gatekeeper who attempts to restrict or dismiss this new paradigm because it could harm their own career. I’m sure it would be disruptive to software engineers if work that once took three people could be done by one.

But this gets back to the idea-propagation value chain and its disruption. Thanks to the printing press, 15th-century monks were no longer the gatekeepers of the written word. The internet removed the distribution monopoly held by newspapers and television during much of the 20th century.

Decoupling creation from substantiation could be one of the most empowering developments for individuals in the long term. It democratizes creation by removing the bottleneck between having an idea and making it real.

Part of the reason being a software engineer is so powerful is that it allows individuals to build tools that change the world in whatever ways they choose. Any effort to make that more accessible should be viewed with admiration, even if we’re not sure how soon it’ll come.

Thanks, all. This is my first time promoting a piece, so I hope you’ll come back for more.

I’m gonna level with you: three days ago, this article was done. Like, my editor had given the green light to publish. Then OpenAI’s chatbot got released and threw a wrench into my subpar piece. At least I don’t have egg on my face.

P.S. Spot the paragraphs OpenAI wrote in this article.