Apple's Private Cloud Compute Security

I am surprisingly delighted

About a month ago, Apple unveiled Apple Intelligence as its AI strategy going forward. Apple Intelligence has a few different pieces. First are on-device models coming to the iPhone 15 Pro, iPhone 15 Pro Max, and newer models. These AI models will have access to the context of all the personal information on your iPhone - a key differentiator against Gemini and OpenAI.

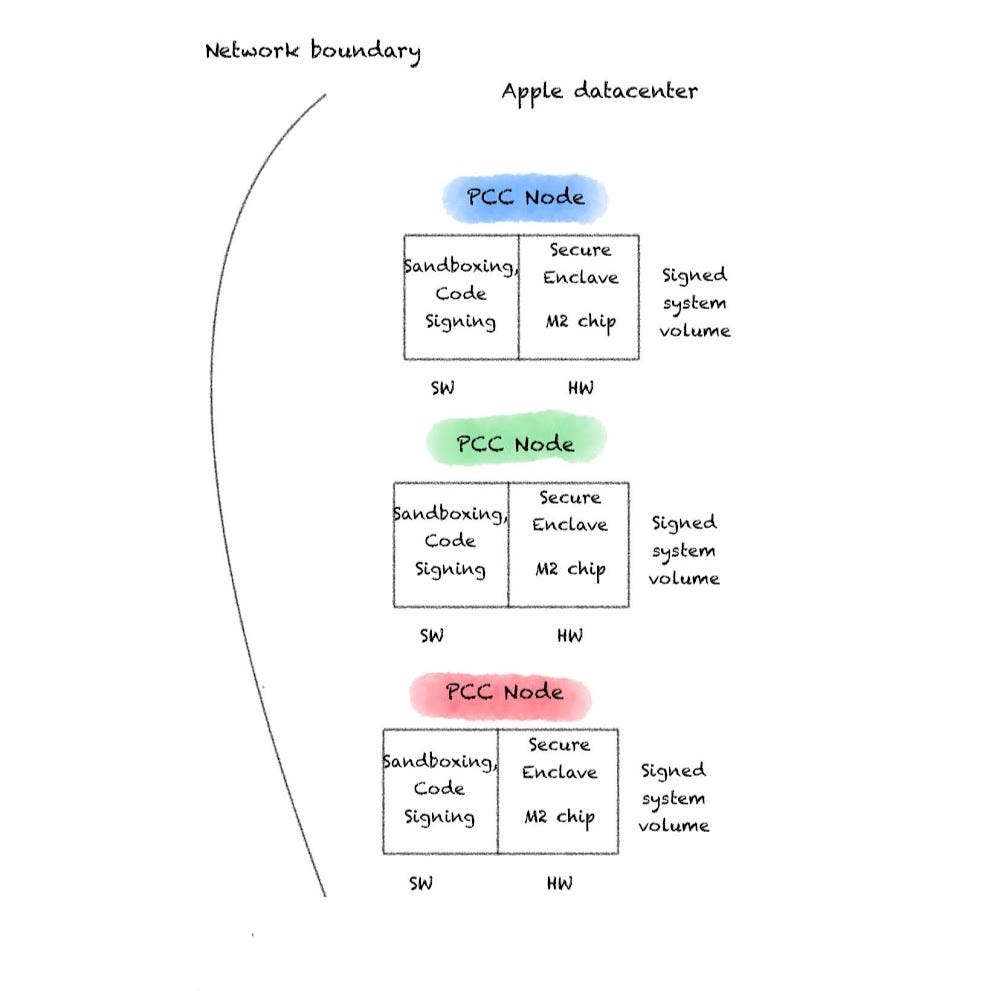

For more computationally expensive requests, processing will be offloaded to Apple’s Private Cloud Compute (PCC), which as far as I can tell is a suite of Apple hardware with M2 chips in their own private data center. The PCC will securely receive a request, run the appropriate model with the query, and return an answer.

Lastly, for requests not suited to Apple’s AI models, third party integrations will be available to utilize OpenAI (and eventually others).

This is a bit of a strategic shift from Apple’s previous positions. The iPhone is still the center of the ecosystem, but there is now the acknowledgement that iPhones do not have the compute power to do everything on device. Apple has previously tweaked its operating system to allow better integration with Stable Diffusion - so Apple has clearly indicated it values on-device inference.

This isn’t groundbreaking, but more a recognition that until inference on these models becomes efficient enough for mobile devices to perform, a hybrid scheme will be the path forward. Models that run inference locally, like Stable Diffusion for image generation, are far less costly than current LLMs. That gap has been narrowing over the past few years. So maybe when Apple announces the iPhone 19, PCC will be a backup for certain circumstances (battery life) or strenuous requests.

Until then, I want to highlight and break down a whitepaper Apple put out on the security and privacy features of its PCC. Apple already had my attention, but I was absolutely thrilled once I understood the security architecture.

What are Apple’s Claims?

Apple’s stated security and privacy design goals are the following:

Stateless computation on personal user data - the only data available to the model will be what is provided in the prompt; no other data about the user will be available.

Enforceable guarantees - all security and privacy claims have a technical underpinning, and not a pinky promise of “I swear we won’t use this data another way”.

No privileged runtime access - PCC nodes should not have traditional access mechanisms of admin users onto the system

Non-targetability - limit attack reach so that individual nodes/users can’t be attacked and if there is a compromise, only the local data of the compromised component is exposed

Verifiable transparency - third party security reviews (of selected parts of the system)

Apple also claims:

This is an extraordinary set of requirements, and one that we believe represents a generational leap over any traditional cloud service security model.

This is a great set of requirements, and in some ways it does raise the bar, but it is probably not a generational leap. I will need to revisit this claim at the end.

Assumptions

I’ll state where there is a gap in what Apple is saying, and what assumptions I have to make to fill it. Part of the reason Apple can be more transparent in its whitepaper is that the security promises of the design ensure maximal security and privacy, barring implementation failures.

Explaining the system

Apple starts with the server-side hardware. They emphasized over and over again that this would be their own hardware - so unless we’re all massively mistaken, there won’t be any Nvidia or AMD chips in sight. Apple also lists Secure Enclave, secure boot, and application sandboxing as available out of the gate because of Apple’s hardware and software architecture. Code signing of the application models and signing of the system volume are also done.

I don’t want to skip over these details, but this is not revolutionary from a security perspective. Apple is following its own best practices and this does require the necessary hardware to be present, but this is an easy win when Apple is using their own hardware. It would be strange for the security features present on their consumer hardware devices to not be present on their servers. However, by Apple filling its datacenter with its own hardware, it will enable security functionality that would otherwise take more effort to match.

The next section of the article discusses how Apple wants to achieve stateless computation. Essentially, Apple does not want to retain any user data - or even have the possibility of access to it. What?

How will Apple not have access to user requests? End-to-end encryption between a user’s device and the PCC node. Each PCC node is issued a signed certificate in manufacturing by one of Apple’s trusted authorities. The node keeps the private key for that certificate in its Secure Enclave, and makes the public certificate available to a gateway that handles load balancing.

This can’t be done in a normal cloud architecture - but it’s a double-edged sword. By designing the system not to run on virtualized hardware, scaling capacity becomes a concern. There isn’t the option to spin up more VMs to meet demand - you’re hard capped by physical capacity. But having Apple’s tightly integrated hardware in the datacenter is also what enables them to securely implement end-to-end encryption between a user and a PCC node. Apple does not specify its encryption scheme - I’ll assume it is a hybrid encryption scheme, with a one-time shared secret derived from a key pair ephemerally generated by the user’s device and the key pair behind the PCC node’s signed certificate. An example of this would be ECIES.
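To make that assumption concrete, here is a minimal sketch of an ECIES-style hybrid scheme in Python. This is my guess at the shape of the scheme, not Apple’s implementation. To stay standard-library only, it swaps the elliptic curve for a toy Diffie-Hellman group over the integers and uses a SHA-256 keystream plus HMAC as a stand-in for a real AEAD cipher. Every name here (`keypair`, `encrypt_for_node`, `decrypt_on_node`) is hypothetical, and none of it is safe for production use.

```python
import hashlib
import hmac
import secrets

# ASSUMPTION / TOY PARAMETERS: real ECIES uses an elliptic curve
# (e.g. P-256 or X25519). A small integer group is used here only so the
# sketch runs with the standard library. It is NOT secure.
P = 2**255 - 19
G = 2


def keypair():
    """Return (private, public) for the toy Diffie-Hellman group."""
    priv = secrets.randbelow(P - 3) + 2
    return priv, pow(G, priv, P)


def derive_keys(shared_secret, eph_pub):
    """Derive an encryption key and a MAC key from the shared secret."""
    material = eph_pub.to_bytes(32, "big") + shared_secret.to_bytes(32, "big")
    okm = hashlib.sha512(b"toy-hybrid-kdf" + material).digest()
    return okm[:32], okm[32:]


def xor_keystream(key, data):
    """Toy stream cipher: XOR data with SHA-256 run in counter mode."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


def encrypt_for_node(node_public, plaintext):
    """Client side: a fresh ephemeral key pair is generated per request."""
    eph_priv, eph_pub = keypair()
    shared = pow(node_public, eph_priv, P)
    enc_key, mac_key = derive_keys(shared, eph_pub)
    ciphertext = xor_keystream(enc_key, plaintext)
    tag = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()
    return eph_pub, ciphertext, tag


def decrypt_on_node(node_private, eph_pub, ciphertext, tag):
    """Node side: recompute the shared secret, check the tag, then decrypt."""
    shared = pow(eph_pub, node_private, P)
    enc_key, mac_key = derive_keys(shared, eph_pub)
    expected = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("ciphertext failed authentication")
    return xor_keystream(enc_key, ciphertext)
```

The point of the design is that only the holder of the node’s private key can recompute the shared secret, so a gateway or load balancer sitting in the middle only ever handles the ephemeral public key, the ciphertext, and the tag.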

So we’ve taken one step forward in terms of privacy. If this were all they did, I’d be a little snarky and surprised that they put out a whole whitepaper to tell us they don’t retain any data from a request and implemented end-to-end encryption.

No debug?

Then Apple said something I hadn’t expected, even after reading that one of their initial design goals was no privileged runtime access. This is the key part about access that can give people better confidence that Apple will not be able to get at their data.

We designed Private Cloud Compute to ensure that privileged access doesn’t allow anyone to bypass our stateless computation guarantees.

They go on to explain that their intention is that no admin should be able to have direct access to the server. Instead of remoting in to debug, the way you would with an EC2 instance, only a limited set of commands will be available to retrieve information from the node, all of which will be under strict audit control.

I find this fascinating from a debugging perspective. Obviously the logging interface can be designed strictly enough to only allow a limited set of information to be retrieved. I hope that limited set is still enough to debug with; otherwise I wouldn’t want to be a server engineer at Apple when I have a model hallucinating on users.
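As a rough illustration of what “a limited set of commands under audit control” could look like, here is a small Python sketch. The command names, the node fields, and the in-memory `audit_log` are all hypothetical; Apple has not published this interface, so treat it as one possible shape rather than a description of PCC.

```python
import json
import time

# Hypothetical allowlist: each command returns operational data only,
# never anything derived from a user's request.
ALLOWED_COMMANDS = {
    "uptime_seconds": lambda node: node["uptime_seconds"],
    "model_version": lambda node: node["model_version"],
    "error_count": lambda node: node["error_count"],
}

# Stand-in for an append-only, tamper-evident audit store.
audit_log = []


def run_operator_command(operator, command, node):
    """Run a command only if it is allowlisted, and audit every attempt."""
    allowed = command in ALLOWED_COMMANDS
    audit_log.append(json.dumps({
        "ts": time.time(),
        "operator": operator,
        "command": command,
        "allowed": allowed,
    }))
    if not allowed:
        raise PermissionError(f"command {command!r} is not on the allowlist")
    return ALLOWED_COMMANDS[command](node)
```

There is no generic “run this shell command” path at all, so denied attempts still leave a trace and there is nothing to escalate into.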

oHTTP

Apple then talks about the lengths to which it will go to make sure that the system cannot be targeted - in either direction. Obfuscation of the user’s IP address is achieved through an oHTTP relay - which essentially forwards encrypted traffic blindly.

For a more in-depth explanation: the client makes a request intended for a resource server - in this case our PCC node. It then encapsulates that request in another request to the relay server, which contains meta information about where it should be sent. The relay forwards it to the gateway, which routes the request to the assigned PCC node, where it is decrypted and a response is generated. This same process is then repeated in reverse - with the oHTTP relay knowing which client the response should be forwarded back to.

The distinction from a VPN is mostly semantic, but oHTTP is designed to protect the privacy of the client from the server. Meta information contained in the request - like a user’s IP address - is kept hidden.
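The split of knowledge between the relay and the gateway is easier to see in a toy simulation. The sketch below is not the real oHTTP wire format: the actual protocol encapsulates requests with HPKE against the gateway’s public key, while here a pre-shared symmetric key and a SHA-256 keystream stand in for that step. The `Relay` and `Gateway` classes and the `seal` and `open_sealed` helpers are hypothetical names of mine.

```python
import hashlib
import secrets
from dataclasses import dataclass, field


def _keystream(key, nonce, length):
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def seal(key, plaintext):
    """Toy encapsulation (ASSUMPTION: stands in for HPKE in real oHTTP)."""
    nonce = secrets.token_bytes(16)
    stream = _keystream(key, nonce, len(plaintext))
    return nonce + bytes(p ^ s for p, s in zip(plaintext, stream))


def open_sealed(key, blob):
    nonce, body = blob[:16], blob[16:]
    stream = _keystream(key, nonce, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


@dataclass
class Gateway:
    key: bytes
    seen: list = field(default_factory=list)

    def handle(self, source_addr, blob):
        # The gateway can read the request, but its peer is the relay.
        request = open_sealed(self.key, blob)
        self.seen.append((source_addr, request))
        return seal(self.key, b"answer to: " + request)


@dataclass
class Relay:
    gateway: Gateway
    address: str = "relay.example"
    seen: list = field(default_factory=list)

    def forward(self, client_ip, blob):
        # The relay knows who is asking, but only sees an opaque blob.
        self.seen.append((client_ip, blob))
        return self.gateway.handle(self.address, blob)
```

After one round trip, `relay.seen` holds the client IP next to ciphertext, and `gateway.seen` holds the plaintext request next to the relay’s address. Neither party holds both halves, which is the whole point.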

I argue all the time with people about the sensitivity of a user’s IP address. I argue that it is almost never valuable to anyone outside of a few niche cases but I digress.

Transparency

There are a handful of other security controls taken. The only one I’ll zoom in on is the promise of third party reviews by security researchers.

When we launch Private Cloud Compute, we’ll take the extraordinary step of making software images of every production build of PCC publicly available for security research. This promise, too, is an enforceable guarantee: user devices will be willing to send data only to PCC nodes that can cryptographically attest to running publicly listed software.
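The client-side check implied by that quote can be sketched in a few lines of Python. This is a simplification under my own assumptions: I treat a “measurement” as a SHA-256 hash of the software image, I represent the public listing as an in-memory set, and I assume the signature on the node’s attestation has already been verified against a hardware root of trust. The build names are made up.

```python
import hashlib


def measure(image: bytes) -> str:
    """Toy measurement: a hash of the software image (ASSUMPTION)."""
    return hashlib.sha256(image).hexdigest()


# Hypothetical public listing of released production builds.
PUBLISHED_MEASUREMENTS = {
    measure(b"pcc-build-1"),
    measure(b"pcc-build-2"),
}


def willing_to_send(attested_measurement: str,
                    published=PUBLISHED_MEASUREMENTS) -> bool:
    """Return True only if the node attests to publicly listed software.

    Assumes the attestation's signature was already checked; this function
    only covers the "is this build publicly listed?" step.
    """
    return attested_measurement in published
```

A node running a modified image produces a different measurement, so the device simply refuses to encrypt a request to it.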

I think this is the right approach for a few reasons. First, the most secure system is the one with the most eyes examining and improving it. If there’s a security flaw, it will be found. Second - the valuable IP is the models, not the security apparatus. Most companies wouldn’t want to commit to this level of security, let alone copy it.

Lack of scaling implications

One point that caught my attention was that clients would be presented a cluster of PCC nodes (and presumably their certificates) to then be able to send requests to. This seemed to go against the non-targetability design goal. The more I read, the more I think that a single request will actually be encrypted for a cluster of different PCC nodes, and then the gateway will match the request to a node it chooses based on expected load.

I thought about this for a day or so - rereading to make sure this wasn’t explained elsewhere - but then I realized this makes sense in a non-virtualized environment. Apple won’t have the benefit of spinning up more VMs to meet the request demand. So instead of anticipating capacity for a specific node, Apple gets around it by having a request technically be made for a cluster and only one PCC node will fulfill it.

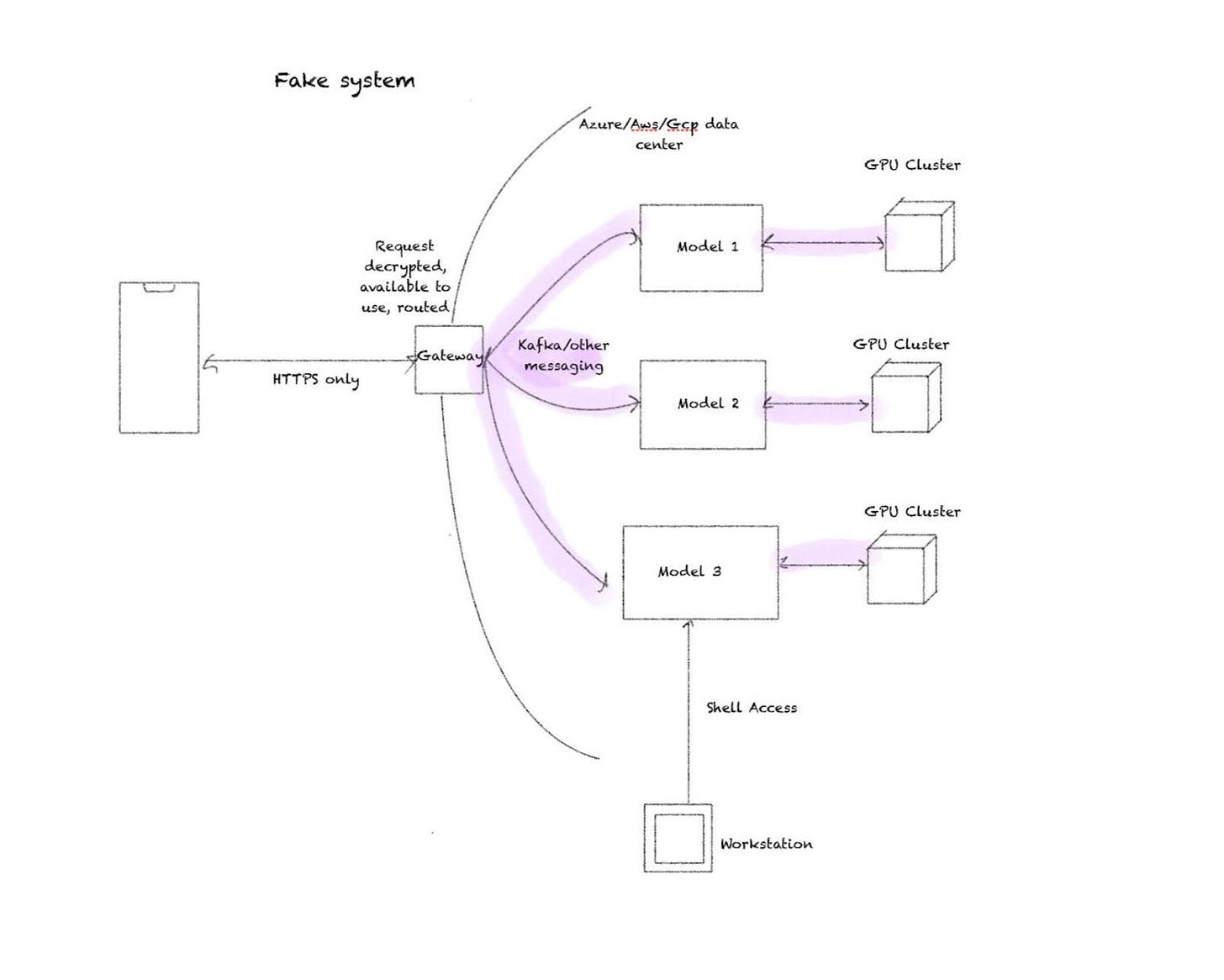
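Here is how I picture that cluster mechanism, as a Python sketch. To be clear, this is my interpretation and not something Apple spells out: the client encrypts the prompt once under a random request key, wraps that key for every node in the cluster it was offered, and the gateway picks the least loaded of those nodes. The symmetric `toy_seal` helper stands in for real public-key wrapping, and all names are hypothetical.

```python
import hashlib
import secrets


def toy_seal(key, data):
    """XOR with a SHA-256 keystream. Applying it twice restores the data.

    ASSUMPTION: a toy symmetric stand-in for public-key encryption to a
    node's certificate key. Not secure.
    """
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


def build_envelope(prompt, cluster_keys):
    """Client: encrypt once, then wrap the request key for each node."""
    request_key = secrets.token_bytes(32)
    return {
        "payload": toy_seal(request_key, prompt),
        "wrapped_keys": {
            node_id: toy_seal(node_key, request_key)
            for node_id, node_key in cluster_keys.items()
        },
    }


def gateway_route(envelope, load_by_node):
    """Gateway: choose the least loaded node the client encrypted for."""
    candidates = envelope["wrapped_keys"]
    return min(candidates, key=lambda node_id: load_by_node[node_id])


def node_decrypt(node_id, node_key, envelope):
    """Node: unwrap the request key with its own key, then decrypt."""
    request_key = toy_seal(node_key, envelope["wrapped_keys"][node_id])
    return toy_seal(request_key, envelope["payload"])
```

The gateway never sees the request key, and it can only route to nodes the client chose to trust, so it cannot steer a specific user’s request to a node outside that set.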

What would an equivalent system do?

Before finishing up, I think it would be useful to examine what a similar system without Apple’s privacy design philosophy might look like.

Essentially, a request will initially be encrypted with HTTPS and sent to a gateway. Most HTTPS sessions terminate at a gateway - where the message is decrypted, before being forwarded onto a queue for models waiting to access the necessary GPU resources. This messaging will probably be handled through a messaging service like Kafka - which supports encrypting traffic in transit, but needs to be configured correctly to do so.

GPU clusters can’t be spun up on demand either, but AWS, GCP, Azure, Meta, etc. have all made heavy investments in GPU acquisition - which outside of being a boon to Nvidia - also means that capacity can generally be met - particularly for lower demand models. Access might be throttled based on a usage or token limit on a per-user basis. In the little over 18 months since ChatGPT started to capture the public eye, capacity and model performance have drastically improved.

I have strong doubts that Apple’s hardware will even be able to match the last-generation H100 in performance - but I wouldn’t be surprised if I were proven wrong.

Evaluation

There’s a lesson I’ve learned from religiously reading Ben Thompson over the years about why technical people might say “no” to something. I’ll paraphrase:

“No” can mean “that’s not possible”. “No” can mean “I don’t want to do that”. And finally, “No” can mean “You don’t understand the repercussions of what you’re asking for”.

If I’m asking someone at Apple if they want to be able to access user data on its PCC nodes, their answer can probably be summarized as “No - I don’t want to, we designed it to not be technically possible, and you don’t understand the repercussions of wanting access to that data”.

There is still a lot I need to learn about the inner workings of this system, but I enjoyed learning about the intricacies and thinking through why design choices might have been made. There are plenty of other details I would have loved to talk more about, but I’ll leave it here for now. I have officially been dunked on for rolling my eyes earlier at Apple’s claimed generational leap in cloud security.

I’ll be posting a follow-up on the wider implications of this system design and why Apple may have decided to go with this approach. Thank you.